Voice chat in Unity and the problems therein

If, by the tribunes' leave, and yours, good people,

I may be heard, I would crave a word or two;

The which shall turn you to no further harm

Than so much loss of time.

So. It's been a while! This was a busy year for MeXanimatoR, and I'll need to write a wrap-up post for the 2023-24 academic year. But first, I'd like to go over some details of the voice chat system's ongoing development. Be forewarned: this will be more of a development log than a tutorial. The journey is the destination, or something like that.

As a reminder, MeXanimatoR started as an asynchronous experience: recording a player's body movement and audio as a digital performance lets you play it back and perform as another player in the scene, casting and layering performances on top of one another. Accessing the microphone, recording to an AudioClip, and saving it to storage have all worked for a while. Playing the AudioClip from the recorded player's head position with 3D sound spatializes the audio, which makes you feel like you're really sharing a space with another person.

Incorporating live multiplayer between separate devices requires a system for transmitting voice. Body movement is synchronized using NetworkBehaviour transforms on the player objects, but there was no system in place for broadcasting voice. This was fine, at first. Initial tests and demos all had multiple players in the same physical space, close enough that they could simply hear one another. That setup presents other problems related to synchronization between play spaces, physical bodies, and avatar placement, but that's for another post on my to-do list about co-location in VR.

Back to voice chat over the network, assuming players are in separate spaces: how do we do it? My first approach was to try Dissonance, a plugin on the Unity Asset Store. Throughout MeXanimatoR's development, I've often looked to existing solutions for portions of the system whenever applicable. This choice is itself a fulcrum of programming thought: when should we approach a problem as a configuration of existing tools, and when should we dive into the details and develop our own system? This Twitter post offers an interesting perspective:

You either conceive of programming as data processing, or you conceive of it as the stitching together of black boxes (libraries and services).

— Hasen Judi 🇮🇶 🇯🇵 (@Hasen_Judi) May 8, 2024

Programming education should teach you how to make behavior emerge via data processing.

BUT, as you begin [to] learn to program, you can't… pic.twitter.com/BmOLNAgvxP

That said, knowing how and when to apply each approach is what matters, and Dissonance comes highly regarded in just about every Unity community I've encountered. It solves a ton of problems. Our networking system is Fish-Net, another plugin, and there's a community-provided integration between the two. Huzzah! I installed and configured the plugin, and after an hour or so of tinkering, I had voice chat coming through on two local instances. On that note, testing network functionality can be tricky. I've been using ParrelSync to clone the project and run separate Unity Editor instances, one as host and the other as client, and it has been the fastest and easiest setup so far.

So here's where the issues began. Despite running on a LAN with tiny frames (10ms), voice chat with Dissonance introduced something like 450ms of latency. It's wild! Where's all this coming from, especially when the expected latency should be much lower? This writeup claims roughly 75ms of latency, while acknowledging that Unity adds around another 100ms. While 150-175ms is better than what I was encountering, it's still rather bad for timing-critical performances. And as it happens, Unity's default Microphone API has some fairly significant lag, too. Putting something like this in your Start() function:

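```csharp
using UnityEngine;

public class MicLoopback : MonoBehaviour
{
    void Start()
    {
        var audio = GetComponentInChildren<AudioSource>();
        // null selects the default recording device; the same name must be passed to GetPosition
        audio.clip = Microphone.Start(null, true, 10, 48000);
        audio.loop = true;
        // Block until the microphone has written its first samples into the clip
        while (!(Microphone.GetPosition(null) > 0)) { }
        audio.Play();
    }
}
```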

lets the player hear their own voice, but with a delay of almost one second. I came across a few other posts about this issue, many of them focused on Quest 2 development, so it's evidently a familiar pain point in standalone VR. But I've been on Windows machines up to this point and hit the same problem!
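One way to watch part of this delay, rather than only hear it, is to track how far the AudioSource's playhead trails the microphone's write head inside the looping clip. The sketch below assumes you feed it `Microphone.GetPosition(null)` and `AudioSource.timeSamples` each frame; `LoopbackLag` and `LagMs` are hypothetical names. This gap is only one of several buffers that can contribute to the total delay, so it's a measuring aid, not a diagnosis of the one-second lag.

```csharp
using System;

// Hypothetical helper: how far an AudioSource's playhead trails the microphone's
// write head inside a looping clip, which behaves like a circular buffer.
public static class LoopbackLag
{
    // writePos:    current write position in samples (e.g. Microphone.GetPosition(null))
    // readPos:     current playback position in samples (e.g. AudioSource.timeSamples)
    // clipSamples: clip length in samples (lengthSec * frequency, 10 * 48000 above)
    // sampleRate:  samples per second (48000 above)
    public static double LagMs(int writePos, int readPos, int clipSamples, int sampleRate)
    {
        if (clipSamples <= 0) throw new ArgumentOutOfRangeException(nameof(clipSamples));
        if (sampleRate <= 0) throw new ArgumentOutOfRangeException(nameof(sampleRate));

        // Distance from reader to writer; the double modulo keeps the result
        // non-negative when the writer has wrapped past the end of the clip.
        int lagSamples = ((writePos - readPos) % clipSamples + clipSamples) % clipSamples;
        return lagSamples * 1000.0 / sampleRate;
    }
}
```

A workaround that comes up on the Unity forums is to keep `timeSamples` a small, fixed distance behind the write head. Whether that helps depends on where the rest of the delay lives, so it's something to measure rather than assume.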

By default, Dissonance uses Unity's Microphone calls to access the voice input. The developers offer an FMOD plugin that is supposed to help with this kind of delay. I'd never worked with FMOD before, but the Dissonance devs state that it can provide faster access to the recording device and avoid the overhead of Unity's Microphone API. When I tried it out, the delay did improve, but we were still at something like 200ms. Something else was going on. After I turned on debug mode in Dissonance, I started getting warnings that echoed closed issues on the Dissonance GitHub: "encoded audio heap is getting very large (46 items)" and the like. Into the past issues and the Dissonance Discord I dove. A Discord thread described a similar problem that just so happened to involve Fish-Net and the community-provided integration layer. There was also an issue with player position tracking for correct audio spatialization: network player IDs weren't available at the right moment. I fixed this by implementing my own component for the IDissonancePlayer interface, but it hinted at other issues that might appear.

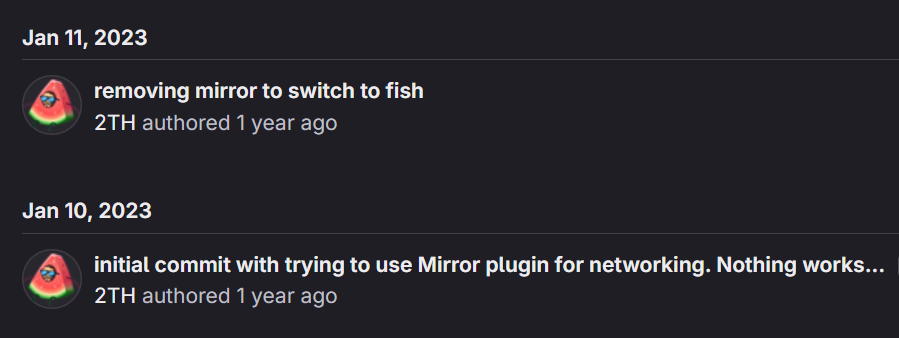
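Whatever Dissonance does internally, that warning describes a familiar failure mode: a receive queue that grows without bound, so each new frame waits behind every frame already queued. If each of those 46 items were one 10ms frame, the backlog alone would come to roughly 460ms, in the same neighborhood as the ~450ms observed above; that's arithmetic, not a verified cause. The hypothetical `BoundedPacketQueue` below sketches the usual remedy in a self-built system: cap the backlog and discard the oldest packets, trading a brief skip for bounded latency. It is not Dissonance's implementation.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical bounded receive queue for encoded voice packets. When the backlog
// exceeds maxPackets, the oldest packets are discarded so playback catches up.
public sealed class BoundedPacketQueue<T>
{
    private readonly Queue<T> _packets = new Queue<T>();
    private readonly int _maxPackets;

    public BoundedPacketQueue(int maxPackets)
    {
        if (maxPackets < 1) throw new ArgumentOutOfRangeException(nameof(maxPackets));
        _maxPackets = maxPackets;
    }

    public int Count => _packets.Count;

    // Total packets discarded so far; useful to log alongside latency measurements
    public int Dropped { get; private set; }

    public void Enqueue(T packet)
    {
        _packets.Enqueue(packet);
        // Drop from the front (the oldest audio) until the backlog is within bounds
        while (_packets.Count > _maxPackets)
        {
            _packets.Dequeue();
            Dropped++;
        }
    }

    public bool TryDequeue(out T packet)
    {
        if (_packets.Count > 0)
        {
            packet = _packets.Dequeue();
            return true;
        }
        packet = default;
        return false;
    }
}
```

With 10ms frames, a cap of 10 packets would bound the queued audio at about 100ms; the right cap is a tradeoff between latency and how much network jitter the queue can absorb.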

As a test, I made a new project that used Dissonance with Mirror as the network system, for which Dissonance provides its own integration layer. Combined with FMOD, this did perform much better: I think it was around 60-70ms. Mirror was actually my first choice for MeXanimatoR's networking system back when I started toying around with it. There were some issues, as summarized in this amusing commit history:

Well, crud. I really didn't want to go back to Mirror from Fish-Net after developing a considerable number of networking components for it. So began the old, dark thoughts once more: what if we didn't use Dissonance at all? We'd lose all of its (very nice and helpful) features and have to rebuild some of them ourselves, but could we handle this in the data-processing manner and not depend on the laggy black box?

That brings us up to speed. Over the last week, I've been toying with FMOD for Unity. At startup, I create one Sound for recording and one for playback. The latency here is much, much better: around 20-30ms between speaking and hearing yourself. Not so much of a speech jammer! I'll also look into NAudio as another option, but so far FMOD is showing the improvements I was hoping for with the Dissonance FMOD plugin.

At the moment, here's what the system can do:

- Start recording and playback for latency testing.

- Access and copy samples from the sound recording on a per-frame basis.

- Save the total recording to storage as an .ogg or .wav file.

- Encode the per-frame samples with Vorbis.
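Copying samples per frame means reading from a looping record buffer whose write head eventually wraps back to the start. A minimal sketch of that bookkeeping follows, assuming mono PCM16 samples already available in a managed array and a write position like the one FMOD's `System.getRecordPosition` reports; `RingReader.ReadNew` is a hypothetical name. It also assumes it's called often enough that the recorder never laps the reader, since equal positions are treated as "no new samples".

```csharp
using System;

// Hypothetical helper: copies the samples recorded since the previous frame out of
// a looping capture buffer, handling the case where the write head has wrapped.
public static class RingReader
{
    // ring:       the looping capture buffer (mono PCM16 samples for simplicity)
    // lastPos:    write position observed on the previous frame
    // currentPos: write position now
    public static short[] ReadNew(short[] ring, int lastPos, int currentPos)
    {
        int length = ring.Length;
        if (length == 0) throw new ArgumentException("Ring buffer is empty.", nameof(ring));
        if (lastPos < 0 || lastPos >= length) throw new ArgumentOutOfRangeException(nameof(lastPos));
        if (currentPos < 0 || currentPos >= length) throw new ArgumentOutOfRangeException(nameof(currentPos));

        // Number of new samples, accounting for wraparound
        int count = (currentPos - lastPos + length) % length;
        var result = new short[count];

        // First segment: from lastPos toward the end of the buffer
        int firstCount = Math.Min(count, length - lastPos);
        Array.Copy(ring, lastPos, result, 0, firstCount);

        // Second segment (non-empty only after a wrap): from the start of the buffer
        Array.Copy(ring, 0, result, firstCount, count - firstCount);
        return result;
    }
}
```

FMOD's `Sound.@lock` hands back two pointer/length pairs for the same reason: when the requested region crosses the end of the buffer, the second pair covers the part that wrapped around to the start.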

What's left to try:

- Transmitting encoded samples via the network.

- Decoding Vorbis samples from the network back to PCM.

- Playing decoded samples live.

- Measuring the latency in this system.
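Before encoded frames can cross the network, each one needs enough framing for the receiver to reorder packets, detect loss, and schedule playback. The layout below is hypothetical, not a Fish-Net or Vorbis format: a 16-bit sequence number, a 32-bit timestamp in samples, and a 16-bit payload length ahead of the encoded bytes. The resulting `byte[]` could then be handed to whatever transport the networking layer provides. One Vorbis-specific caveat: a Vorbis decoder must receive the three header packets (identification, comment, and setup) before it can decode any audio packets, so a client joining mid-stream would need those delivered separately.

```csharp
using System;
using System.Buffers.Binary;

// Hypothetical wire format for one encoded voice frame:
// [sequence: u16][timestamp in samples: u32][payload length: u16][payload bytes]
public readonly struct VoicePacket
{
    public const int HeaderSize = 8;

    public readonly ushort Sequence;  // detects loss and reordering; wraps at 65536
    public readonly uint Timestamp;   // sample index of the frame's first sample
    public readonly byte[] Payload;   // encoded bytes (e.g. one Vorbis packet)

    public VoicePacket(ushort sequence, uint timestamp, byte[] payload)
    {
        Sequence = sequence;
        Timestamp = timestamp;
        Payload = payload ?? throw new ArgumentNullException(nameof(payload));
    }

    public byte[] Serialize()
    {
        if (Payload.Length > ushort.MaxValue)
            throw new InvalidOperationException("Payload too large for a u16 length field.");
        var buffer = new byte[HeaderSize + Payload.Length];
        var span = buffer.AsSpan();
        // Little-endian fields so both ends agree regardless of platform
        BinaryPrimitives.WriteUInt16LittleEndian(span.Slice(0, 2), Sequence);
        BinaryPrimitives.WriteUInt32LittleEndian(span.Slice(2, 4), Timestamp);
        BinaryPrimitives.WriteUInt16LittleEndian(span.Slice(6, 2), (ushort)Payload.Length);
        Payload.CopyTo(buffer, HeaderSize);
        return buffer;
    }

    public static VoicePacket Deserialize(byte[] data)
    {
        if (data == null || data.Length < HeaderSize)
            throw new ArgumentException("Packet shorter than header.", nameof(data));
        var span = data.AsSpan();
        ushort sequence = BinaryPrimitives.ReadUInt16LittleEndian(span.Slice(0, 2));
        uint timestamp = BinaryPrimitives.ReadUInt32LittleEndian(span.Slice(2, 4));
        int length = BinaryPrimitives.ReadUInt16LittleEndian(span.Slice(6, 2));
        if (data.Length < HeaderSize + length)
            throw new ArgumentException("Truncated payload.", nameof(data));
        return new VoicePacket(sequence, timestamp, span.Slice(HeaderSize, length).ToArray());
    }
}
```

The sample timestamp also gives a hook for the latency item: if the sender logs when each timestamp was captured, the receiver can compare against when it actually plays that frame.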

There's still quite a ways to go, but I'm hoping to have most of these things testable before taking a vacation next week. Oh, ambition, you love to take such huge bites.

Direct access has its own cost: we lose some of the conveniences Dissonance provides, such as audio quality configuration, noise suppression, and background sound removal. Adding these back takes time, both in development and as small increments that each add to the voice system's latency.

For measuring latency, I've just been using OBS to record the microphone and the desktop audio to separate tracks. Then I extract the tracks with ffmpeg and compare the waveforms in Audacity. It's a bit of a manual process (the coder's anathema), but it works for the moment.
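The Audacity comparison could be automated with cross-correlation, a swapped-in technique rather than anything in the current workflow: slide one track against the other and keep the offset where they line up best. The sketch assumes both tracks are already decoded to mono float PCM at the same sample rate (ffmpeg can export raw float samples), and that the desktop track lags the microphone track by at most `maxLag` samples. `LagEstimator` is a hypothetical name, and a sharp transient like a clap or click gives the clearest peak.

```csharp
using System;

// Hypothetical helper: estimates how many samples `delayed` lags behind `reference`
// by brute-force cross-correlation over lags 0..maxLag.
public static class LagEstimator
{
    public static int EstimateLagSamples(float[] reference, float[] delayed, int maxLag)
    {
        if (maxLag < 0) throw new ArgumentOutOfRangeException(nameof(maxLag));
        int bestLag = 0;
        double bestScore = double.NegativeInfinity;
        for (int lag = 0; lag <= maxLag; lag++)
        {
            // Compare reference[i] with delayed[i + lag] over the overlapping region
            int n = Math.Min(reference.Length, delayed.Length - lag);
            double score = 0;
            for (int i = 0; i < n; i++)
                score += reference[i] * delayed[i + lag];
            if (score > bestScore)
            {
                bestScore = score;
                bestLag = lag;
            }
        }
        return bestLag;
    }

    public static double SamplesToMs(int samples, int sampleRate) => samples * 1000.0 / sampleRate;
}
```

The loop costs O(n × maxLag), which is fine for a few seconds of audio; for long recordings, an FFT-based correlation would be the usual choice.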

That's all I've got for now! I'm hoping to write more over the summer, if only to try and keep track of all the configurations as I go through them.